I'm looking into a severe LOGFLUSHQ spinlock issue in SQL Server 2019 CU16.

The issue first presented itself afaik in CU 15.

I'll probably blog more thoroughly about all that another day.

Here I just wanted to return to a detail from yesteryear.

Last time I checked was probably SQL Server 2014 - at that time each database had 128 in-memory transaction log buffers, each of which could be up to 60 kb (hence why each transaction log write could be up to 60 kb).

Since the logflushq spinlock contention is in the context of transaction log flush/write queue management, I figured I'd better check if the number of buffers had increased, or if the maximum size of those buffers had increased.

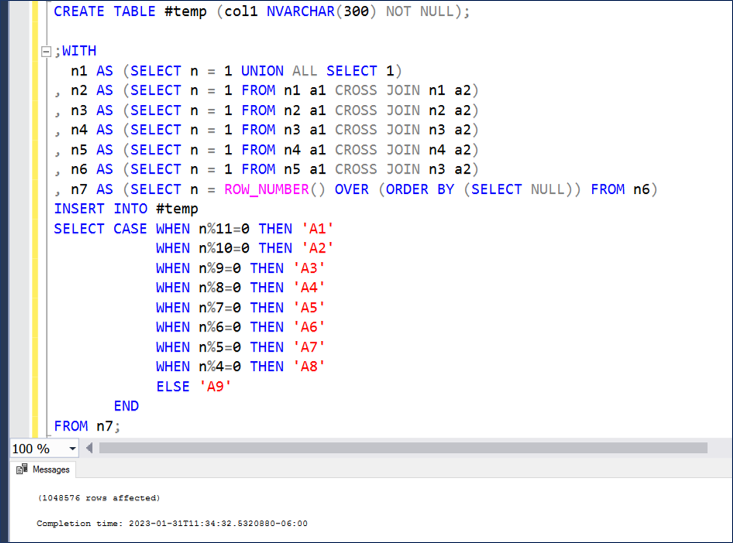

The T-SQL code I used is down below...

These results from SSMS show that SQL Server 2019 is what I am used to so far:

128 transaction log buffers, each with a max size of 60k.

And lc_state for those buffers can be retrieved from DBCC DBTABLE.

DROP TABLE IF EXISTS #table;

CREATE TABLE #table

([ParentObject] VARCHAR(255),

[Object] VARCHAR(255),

[Field] VARCHAR(255),

[Value] VARCHAR(255)

);

DECLARE @sqlT NVARCHAR(1000)=

'dbcc dbtable(' + quotename(db_name()) + ') with tableresults, no_infomsgs';

INSERT INTO #table

EXEC (@sqlT);

SELECT SQLServer_Version = @@version;

SELECT ParentObject

FROM #table

WHERE LEFT(ParentObject,

CASE WHEN PATINDEX('% @%', ParentObject) > 2

THEN PATINDEX('% @%', ParentObject) - 1 ELSE 1 END) = 'LogMgr'

GROUP BY ParentObject;

WITH LogMgr AS

(SELECT ParentObject

FROM #table

WHERE LEFT(ParentObject,

CASE WHEN PATINDEX('% @%', ParentObject) > 2

THEN PATINDEX('% @%', ParentObject) - 1 ELSE 1 END) = 'LogMgr'

GROUP BY ParentObject),

LogMgr_objects AS

(SELECT OBJECT_TYPE = LEFT(t.Object,

CASE WHEN PATINDEX('% @%', t.Object) > 2

THEN PATINDEX('% @%', t.Object) - 1 ELSE 1 END),

t.object

FROM #table t

JOIN LogMgr ON t.ParentObject = LogMgr.ParentObject

GROUP BY LEFT(t.Object,

CASE WHEN PATINDEX('% @%', t.Object) > 2

THEN PATINDEX('% @%', t.Object) - 1 ELSE 1 END), t.object)

SELECT LO.OBJECT_TYPE, object_count = COUNT(*)

FROM LogMgr_objects LO

GROUP BY LO.OBJECT_TYPE;

SELECT t.Field, t.value, NUM_log_buffers = count(*)

FROM #table t

WHERE t.object LIKE 'LC @0x%'

AND t.field = 'lc_maxDataSize'

GROUP BY t.field, t.value;

SELECT t.Field, t.value, NUM_log_buffers = count(*)

FROM #table t

WHERE t.object LIKE 'LC @0x%'

AND t.field = 'lc_state'

GROUP BY t.field, t.value;